- Trust is critical for a healthy corporate culture, but building and maintaining trust has become even more challenging in the digital age.

- Employees are optimistic about AI, but they need to trust that their leaders have good intentions with it.

- To avoid damage to corporate culture, employee engagement and morale, executive teams need to make building and maintaining trust part of the technology strategy.

Trust is one of the hallmarks of a healthy corporate culture. But even leaders at organizations with some of the strongest corporate cultures admit that developing and maintaining trust in senior leadership is an ongoing problem. With the rise of the digital age, that problem is only getting more challenging.

Leaders around the globe realize this is a growing problem. In PwC’s “20 Years Inside the Mind of the CEO” study, two out of three CEOs said that it’s harder for business to sustain trust in the digital age. They also said they believe artificial intelligence (AI) and automation will affect trust levels in the future.

As the move to automate more areas of the business picks up steam, it is increasingly urgent to face these issues and make trust-building a leadership priority. Our recent study on preparing people for success in the era of AI found that trust in senior leadership is one of three primary factors that will make people feel more positive about AI in the workplace. The other two are transparency and confidence in their own ability to make the transition.

Let’s take a closer look at why trust is so critical.

Why the Implementation and Use of AI Matters

Our study found that people are generally optimistic about AI: 84% expect that AI's impact on the way we work and live will be positive. But it's important to look at where that enthusiasm comes from. Overall, 70% said they would feel positive about the potential to turn over the routine tasks of their job to AI so that they can focus on more meaningful work. In other words, their optimism depends on AI being used to take routine work off their plates rather than to watch or judge them, so they need to trust that their leaders have good intentions in how they implement it.

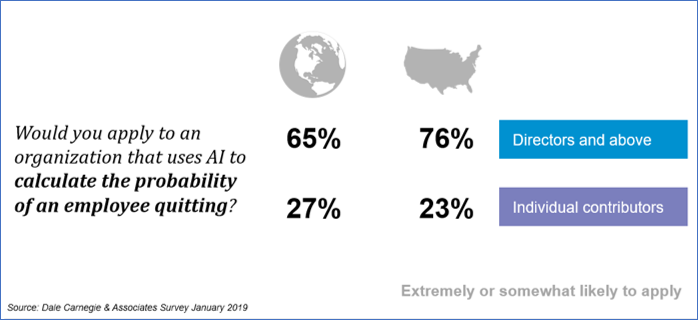

That concern shows up in the data in a variety of ways. For example, when we asked respondents whether they would apply to an organization that uses AI to monitor their activities, only 32% of individual contributors said they'd be extremely or somewhat likely to apply. This use of AI could also lead to higher turnover, lower productivity and disengagement among current employees.

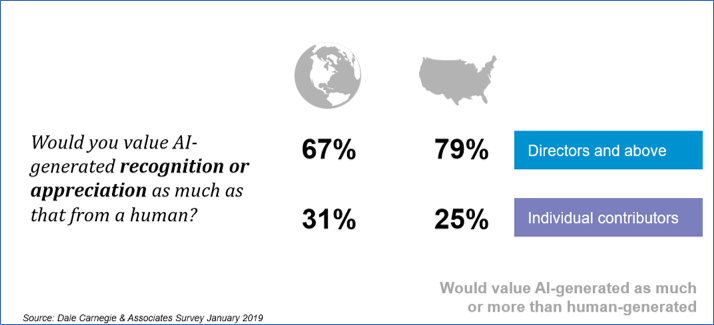

We also asked individual contributors how likely they would be to trust and accept a performance appraisal conducted by AI. When we specified that the criteria wouldn't be fully transparent, which is the most likely scenario, only 32% said they'd be willing to accept the appraisal. And about 7 in 10 individual contributors said they would value AI-generated recognition or appreciation less than recognition from a human, or would not value it at all.

The Leadership Trust Disconnect

The leaders in our study are clearly concerned about the possible unintended consequences of AI. At the director level or higher, 76% of respondents in the U.S. said they were at least moderately worried about the potential impact of AI on their organization’s culture.

But when it comes to recognizing the full extent of the trust issue that already exists, many have room for improvement. While more than 7 out of 10 respondents at the director level and above said they have "a lot" of trust or "complete" trust in their current leaders to make the right decisions about AI implementations, those numbers drop considerably in the rest of the organization. About half (48%) of middle managers felt the same way, and only 26% of individual contributors expressed a high level of trust in their current leaders.

In fact, respondents at the director level and above were much more open to AI, and more trusting of leaders' intentions, than others in the organization. For example, 71% of director-level and above respondents said they'd be somewhat or very likely to apply for a position with a company that uses AI to monitor employees' activities to evaluate and improve productivity. By contrast, only 32% of individual contributors felt that way.

These gaps could have major consequences for a healthy corporate culture. Imagine the skepticism these executive teams will face when they discuss AI projects with an audience that questions their motives. The technology deployment could end up doing more harm than good.

How to Include Trust in Your AI Strategy

Building and maintaining trust isn’t easy, but there are some specific steps leaders can take to increase trust so that people will feel more confident about an AI implementation:

- Be honest and consistent in all actions and behaviors: Senior leaders’ actions and decisions must reflect the values they communicate. This is more important than ever in the digital age because even minor inconsistencies can damage trust.

- Be transparent about why AI is being implemented: Employees and customers alike may wonder what the true purpose is, especially when there are privacy and security concerns. In our survey, 63% of respondents are at least moderately worried about privacy issues, and 67% are worried about cybersecurity issues. Being upfront about the purpose of the technology goes a long way in building trust.

- Make AI a cultural value-add: As mentioned above, employees are generally positive about AI, as long as it is being used to drive value, not to reduce headcount or "spy" on them. Before deploying any new technology, consider how it will impact corporate culture, employee engagement and morale. If you ignore these factors, the negatives could outweigh any positives you might have gained.

For more in-depth discussion of trust and other factors that can impact the success of an AI implementation, download the white paper, Beyond Technology: Preparing People for Success in the Era of AI.